How do digital images and videos display colour?

Digital image and video objects record and store colour information in different ways depending on their format and encoding methods. When creating visual digital media content, we usually want to have the best quality image possible, but with the smallest file size possible to allow for fast internet download and rendering by software applications.

Resolution

Both image and video files have properties such as Resolution, Width, Height, and File size.

Resolution is how much detail the image holds. This is measured by pixel count in digital images and video. Pixels are tiny squares or dots of colour that form the rectangular grid of an image or video’s visible area. The more pixels in your image, the higher the resolution and the more detail or information stored in the image. This also means better quality and colour.

Image resolution is important to understand if you’re working with screen and print media. We generally use a lower resolution image for screen media and content on the Internet because we want fast loading times, and our screens have a limited resolution display. For printing, a higher resolution is better for image quality. Printers can print at different resolutions depending on their capability. Commercial and professional printers can produce extremely high-resolution imagery necessary for fine art photography and publications. Your home inkjet printer can also print at different resolutions, depending on the quality of the printer.

Resolution in images is measured by the number of pixels in width multiplied by height, but also by the density of those pixels. Density is measured in dots per inch (DPI) for print, or pixels per inch (PPI) for screens, which is how many dots or pixels fit along one linear inch. For a given physical size, the higher the DPI, the better quality the image will be.

The image in Figure 3.25 on the left is low-resolution. It only has 1% of the information contained in the high-resolution image on the right.

Have you ever seen an image on a webpage that looked great on your computer, but when you try to print it, it looks terrible? This is because the screen image has a low resolution – possibly 72 DPI – and low dimensions – possibly 800 pixels width x 600 pixels height or less.

Your printer can produce images that are anywhere from 150 to 1200 DPI (or higher, depending on the printer), so your small image doesn’t have enough pixels to produce a good quality print image.
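The relationship between pixel dimensions, DPI and print size can be checked with simple arithmetic. The sketch below is illustrative only: the function name `print_size_inches` is made up for this example, and 300 DPI is assumed as a typical print density within the range mentioned above.

```python
def print_size_inches(width_px, height_px, dpi):
    """Return the (width, height) in inches when the pixels are printed at `dpi`."""
    return width_px / dpi, height_px / dpi


# The 800 x 600 pixel web image from the text, at screen and print densities.
for dpi in (72, 300):
    width_in, height_in = print_size_inches(800, 600, dpi)
    print(f"{dpi} DPI: {width_in:.2f} x {height_in:.2f} inches")
# 72 DPI: 11.11 x 8.33 inches
# 300 DPI: 2.67 x 2.00 inches
```

At 300 DPI the same pixels only cover a print a few inches wide, which is why stretching the image to fill a page looks poor.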

It’s important to save your image at the right resolution and dimensions for its purpose, and to use the right colour system – RGB for screens, CMYK for print.

Also important: once you have reduced an image’s resolution – width/height or DPI – and saved it, that information is lost. If you try to increase the resolution again, your editing software compensates by duplicating pixels or estimating new ones from their neighbours (interpolation), but it cannot recover the lost information, so you’ll still see a blocky and pixelated image. This affects the colour as well – the lost colour information cannot be replaced.
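A tiny experiment shows why the lost detail cannot be recovered. This is a minimal sketch that assumes the simplest possible resizing method (keeping or duplicating pixels, often called nearest neighbour). Real editing software uses more sophisticated interpolation, and the helper names `downscale` and `upscale` are invented for this example.

```python
def downscale(pixels, factor):
    """Keep every `factor`-th pixel in each direction and discard the rest."""
    return [row[::factor] for row in pixels[::factor]]


def upscale(pixels, factor):
    """Duplicate each pixel `factor` times in each direction."""
    enlarged = []
    for row in pixels:
        wide_row = [value for value in row for _ in range(factor)]
        enlarged.extend(list(wide_row) for _ in range(factor))
    return enlarged


# A 4 x 4 greyscale "image" in which every pixel has a different value.
original = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]

small = downscale(original, 2)   # 2 x 2: three quarters of the values are gone
restored = upscale(small, 2)     # 4 x 4 again, but made of 2 x 2 blocks

print(restored == original)      # False
```

The restored image has the original dimensions, but each 2 x 2 block repeats a single value, which is the blocky look described above.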

Many software editing applications have processes that attempt to compensate for the loss of information, and more recently Artificial Intelligence (AI) is increasingly used to ‘intelligently’ enhance image quality by replacing the lost information or repairing images that are damaged or blurry. These tools are useful, although if you work with very high-resolution images, image enhancement is no match for retaining the original image information.

Learn more about AI image enhancement from this article about AI super resolution.

Compression and encoding

More pixels result in a bigger file size – which is the number of bytes (kilobytes, megabytes, gigabytes, terabytes, etc.) in the file. This can be problematic with video – even a short video can have thousands of frames, and each frame is in effect an image, so video files are much bigger than still images.
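To get a feel for the numbers, the following sketch estimates uncompressed sizes. It assumes 3 bytes per pixel (24-bit colour), a 1920 x 1080 frame and 24 frames per second. These values are chosen for illustration, and `uncompressed_bytes` is a hypothetical helper.

```python
def uncompressed_bytes(width, height, bytes_per_pixel=3, frames=1):
    """Raw size in bytes: every pixel of every frame stored in full."""
    return width * height * bytes_per_pixel * frames


still_image = uncompressed_bytes(1920, 1080)
one_minute_video = uncompressed_bytes(1920, 1080, frames=24 * 60)

print(f"Still image: {still_image / 1e6:.1f} MB")            # Still image: 6.2 MB
print(f"One minute of video: {one_minute_video / 1e9:.2f} GB")  # One minute of video: 8.96 GB
```

Even before audio is added, one minute of uncompressed full HD video is over a thousand times the size of a single frame, which is why compression matters so much for video.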

Image and video files also store colour information in different ways.

Image file formats

RAW is a raster image format (based on a fixed grid of pixels) used for unprocessed images usually captured with a camera. There are many different RAW image formats, and these are often dependent on which camera you use. RAW images are ultimately converted to another format to be used for digital media (JPEG, PNG) or print (TIFF, high-quality JPEG). Your camera or mobile device may have the settings option to save images as RAW or JPEG.

Note: RAW images will take up more file space on your device.

Think of a RAW image like a traditional physical photographic negative that can be reproduced in multiple formats (although a RAW image is not a negative image). Keeping a RAW version of an image means that you have all the original information of the image, which can then be altered using non-destructive editing techniques to achieve a particular result. For example, you might change the exposure, brightness, contrast, and colour values of an image in your editing software and save that as a TIFF image file for printing, but you still have the original RAW file to return to if you want to create a different version of the image with different colour values.

Learn more about RAW

Learn more about non-destructive editing.

GIF (Graphics Interchange Format) was one of the first raster image formats used on the Internet. It has a very limited palette of colours available – only 256 colours. This is partly because it was created when bandwidth was much lower and slower than it is today. Only the smallest of image file sizes could be used to share images across networks or display graphics on web pages. Also, screens only displayed 256 colours at that time, so there was no requirement for more colour information. GIF uses the Lempel-Ziv-Welch (LZW) compression algorithm, which identifies repeating patterns and replaces them with shorter codes. This is lossless compression – none of the data is discarded in the process.
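The idea behind Lempel-Ziv-Welch compression can be sketched in a few lines. This is a simplified, textbook-style version that outputs a plain list of integer codes. The real GIF format adds details not shown here (such as variable-width codes and special control codes), and the function names are chosen for this example only.

```python
def lzw_encode(data):
    """Replace repeated byte sequences with codes from a growing dictionary."""
    table = {bytes([i]): i for i in range(256)}  # start with every single byte
    next_code = 256
    current = b""
    codes = []
    for value in data:
        candidate = current + bytes([value])
        if candidate in table:
            current = candidate               # keep extending a known pattern
        else:
            codes.append(table[current])      # emit the longest known pattern
            table[candidate] = next_code      # remember the new, longer pattern
            next_code += 1
            current = bytes([value])
    if current:
        codes.append(table[current])
    return codes


def lzw_decode(codes):
    """Rebuild the original bytes, recreating the same dictionary on the fly."""
    if not codes:
        return b""
    table = {i: bytes([i]) for i in range(256)}
    next_code = 256
    previous = table[codes[0]]
    output = [previous]
    for code in codes[1:]:
        # A code not yet in the table can only be "previous + its first byte".
        entry = table[code] if code in table else previous + previous[:1]
        output.append(entry)
        table[next_code] = previous + entry[:1]
        next_code += 1
        previous = entry
    return b"".join(output)


pixels = b"ABABABABABAB"          # a repeating pattern, like a flat logo
codes = lzw_encode(pixels)
print(len(pixels), "bytes ->", len(codes), "codes")   # 12 bytes -> 6 codes
print(lzw_decode(codes) == pixels)                    # True: nothing was lost
```

Because decoding gives back exactly the original bytes, the compression is lossless. Images with large areas of repeated colour compress best.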

GIF images can save different minimal colour ‘palettes’ – you can choose to save only the colours used in the image (indexed colour), which makes the file sizes very small. Because of the limitations of GIF image colour, it is a good format for simple graphics like logos but not good for photographic images. You can also save transparency in a GIF image – which is useful for something like logo design, where you might want a transparent background so that the same image can sit on different coloured backgrounds – for example, in webpages.

GIF was the first common image format that allowed animation (WebP images also allow animation, but are not commonly used at this time). Animated GIFs are multi-frame images that hold information like frame rate and looping properties. They have had a resurgence in popularity on social media and in meme culture because they are usually very short in timeframe and small in file size, therefore easy to access on mobile devices.

Learn more about GIF:

Smithsonian magazine: A Brief History of the GIF, From Early Internet Innovation to Ubiquitous Relic.

The JPEG (Joint Photographic Experts Group) raster image format became a standard image format in the late 1980s and early 1990s. It allows for millions of colours in an image (24-bit colour), which is good for capturing photographic images. JPEG uses a system of lossy compression to reduce the amount of information in an image file and therefore reduce the image file size – making it a useful image format for the Internet.

It’s called ‘lossy compression’ because visual data that the human eye can’t see is removed, and colour variation is averaged, so some of the image information is lost in the compression process. You might have seen low quality JPEG images on websites or social media where the image looks blocky or has visible ‘artefacts’ (noise or aliasing on the edges) – this is due to the JPEG format compression.

The user can control the level of compression in a JPEG image – more compression results in a lower quality and smaller file size. Less compression results in a higher quality and bigger file size. Your image editing software will give you compression options when you save your image as a JPEG/JPG file.

SVG (Scalable Vector Graphics) is a very different kind of image format. It is not a resolution-dependent raster image format. Instead, it is based on vector graphics, which are mathematical statements that describe lines, curves, shapes and colours on the Cartesian plane (x and y axes). This makes it scalable, so no matter how big or small you size the image, it will retain its quality – it redraws the geometric information at any size. Because an SVG file stores mathematical information and not pixel information, SVG files are generally very small and can also be used in websites as XML code.
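Because SVG is plain XML text, a file can be written by hand or generated by a short script. The sketch below builds the same circle at two very different output sizes. The shape, the colour and the helper name `circle_svg` are arbitrary choices for illustration.

```python
import xml.etree.ElementTree as ET


def circle_svg(size_px):
    """Return SVG markup for one circle, displayed at `size_px` pixels square."""
    return (
        '<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{size_px}" height="{size_px}" viewBox="0 0 100 100">'
        '<circle cx="50" cy="50" r="40" fill="crimson"/>'
        '</svg>'
    )


icon = circle_svg(16)         # small enough for a browser tab icon
billboard = circle_svg(4000)  # large enough for print output

# Both strings are well-formed XML describing the same geometry.
for markup in (icon, billboard):
    root = ET.fromstring(markup)
    print(root.get("width"), root.get("viewBox"), len(markup), "characters")
```

The geometry inside `viewBox` never changes. Only the requested display size differs, so the two files are almost the same length even though one is drawn 250 times larger.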

This kind of graphic is generally not used for photographic images – calculating the vector maths for a complex photographic image would be less efficient than using pixels and compression formats. However, vector graphics are very useful for visual materials that need to be reproduced at different sizes in both digital and print media, such as advertising graphics output like posters, banners, billboards, signage, etc. Other common vector image file formats that digital media creators may encounter include EPS, AI, and PS.

There are many, many more image file formats that are useful to know about when creating digital media, such as:

- PNG – (Portable Network Graphics): raster image format good for photographic images, supports lossless compression and has millions of colours, transparency (PNG-24), and is commonly used in webpage design.

- TIFF – (Tagged Image File Format): raster image format popular with photographers for storing lossless, high-quality images for printing.

- BMP – (Bitmap): a high-quality, uncompressed raster image format. Originally created by Microsoft.

- HEIC – (High Efficiency Image Container): a more recent raster file format, a variant of HEIF (High Efficiency Image File Format), that has better quality than JPEG, using HEVC (High Efficiency Video Coding) for compression. It is now commonly used in Apple devices. A HEIC image may be half the size of a JPEG but with higher quality.

Adobe: HEIC vs JPEG.

- WebP is a more recent raster image format developed for the web. It has better quality lossless and lossy compression compared to either PNG or JPEG. It uses predictive coding to compress the visual data, resulting in comparatively small file sizes, and is currently supported in recent browsers. You can also have animated WebP images.

Google developers: WebP.

There are also native image file formats that are the image files created by particular software applications. For example, Adobe Photoshop has a native file format that has the extension PSD. This file can only be used by Photoshop – you have to export a JPEG, PNG, GIF or another type of file from the Photoshop PSD file if you want to use your image on the Internet for example.

Digital video properties

Video files without compression are so big in file size that they would be very slow to download – even with very fast Internet – and difficult to play without lagging and buffering.

If we didn’t have compression, you wouldn’t be able to stream videos from platforms like Netflix or Google Play.

A video file has certain properties:

Container – type of file – MP4, AVI, MOV, etc.

Dimensions/resolution – width and height in pixels (Figure 3.26).

Some common video resolutions:

- SD (standard definition) – any video with a height of 480 pixels or less

- HD (high definition) – 1280 x 720 pixels

- full HD – 1920 x 1080 pixels

- 4K UHD (Ultra HD) – 3840 x 2160 pixels

- 8K (full ultra HD) – 7680 x 4320 pixels

This image shows the difference in video resolutions (from a photo by Babelphotography via Pixabay, licensed under CC0).

Learn more about video resolution here… Typito: video resolutions

Frame rate – how many frames are shown per second (fps). 24 fps has been the standard frame rate for film for many years, 30 fps (or 25 fps in PAL regions) is used for TV broadcasts, and 60 fps is becoming more common in 4K UHD video. For capturing slow-motion footage, high-speed cameras record at frame rates of 120 fps and higher.

Duration: the length of a video in hours, minutes, and seconds (HH:MM:SS). For example, 01:30:24 describes a video duration that is one hour, 30 minutes and 24 seconds long.

Bit rate (bitrate) is the amount of data stored for each second of a media file (video and audio). It’s usually measured in megabits per second (Mbps). Note that this is megabits, not megabytes: there are 8 bits in a byte.

- A high bitrate is associated with a high resolution and/or low compression, which means bigger file sizes.

- A low bitrate is associated with a low resolution and/or high compression, which means smaller file sizes.
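Bitrate and duration together give a quick estimate of file size. The sketch below assumes the bitrate is expressed in megabits per second (Mbps), the unit encoders and streaming services normally quote, and uses an illustrative value of 5 Mbps. Both function names are hypothetical.

```python
def duration_seconds(hhmmss):
    """Convert an HH:MM:SS duration string to a number of seconds."""
    hours, minutes, seconds = (int(part) for part in hhmmss.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def estimated_size_megabytes(bitrate_mbps, hhmmss):
    """Approximate file size: megabits per second x seconds, then 8 bits per byte."""
    total_megabits = bitrate_mbps * duration_seconds(hhmmss)
    return total_megabits / 8


# The 01:30:24 duration used as an example above, at an assumed 5 Mbps.
print(duration_seconds("01:30:24"))             # 5424
print(estimated_size_megabytes(5, "01:30:24"))  # 3390.0
```

Halving the bitrate halves the estimated size, which is the trade-off between quality and file size described in the list above.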

As with still images, you can’t turn a low-quality video into a high-quality video because there isn’t enough data about the pixel colours in each frame to increase the resolution or replace the data lost in compression. AI tools may be able to fill in and define low-resolution video to improve quality. For example, grainy, low-light video footage can be smoothed and better defined, but this functionality is limited and is not equal to capturing high-resolution video in the first place.

Video Codecs

Codec (a portmanteau word for coder-decoder) is the software encoding method that compresses video data and stores it in the container (file) so it can be decompressed and played back.

Video compression, as with image files, reduces the amount of colour information stored in the video file, ideally without visibly altering what the viewer sees. Most video codecs are lossy – some of the data of an uncompressed video file is lost in the compression process, which is necessary to make file sizes small enough to play back smoothly and download quickly or stream at the right speed.

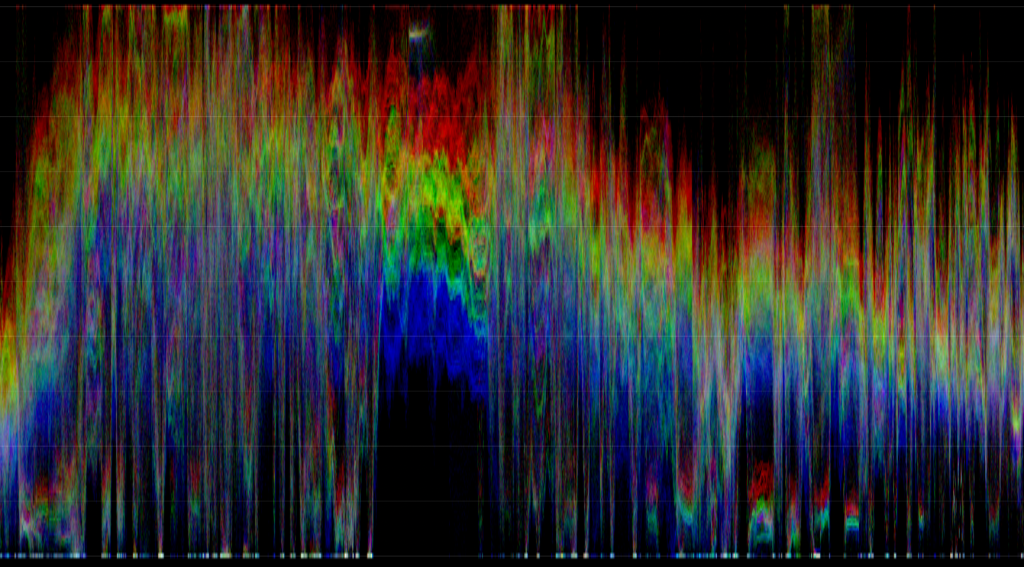

There are many different kinds of video and audio codecs, and they all use different compression methods to reduce file size. Codecs in video work by reducing the data by motion compensation, macroblocks (large blocks of pixels that have the same colour or motion) and chroma subsampling.

Motion compensation divides a video into three kinds of frames: I-frames, P-frames and B-frames. I-frames (keyframes) contain the complete image, whereas P-frames record only what has changed since an earlier frame, and B-frames record changes relative to both earlier and later frames. I-, P- and B-frames make up what we call a “group of pictures” (GOP), and this method can reduce the size of the video file by up to 90%.
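The saving from recording only changed pixels can be illustrated with a toy example. This is plain frame differencing on a tiny greyscale grid, not real motion compensation: actual codecs work on blocks of pixels and store motion vectors. The function names are invented for this sketch.

```python
def encode_p_frame(previous, current):
    """Record only the pixels that differ from the previous frame."""
    return {
        (y, x): value
        for y, row in enumerate(current)
        for x, value in enumerate(row)
        if previous[y][x] != value
    }


def decode_p_frame(previous, changes):
    """Rebuild the full frame from the previous frame plus the recorded changes."""
    frame = [list(row) for row in previous]
    for (y, x), value in changes.items():
        frame[y][x] = value
    return frame


# A bright dot (9) moves one pixel to the right on a dark background (0).
i_frame = [
    [0, 0, 0, 0],
    [0, 9, 0, 0],
    [0, 0, 0, 0],
]
next_frame = [
    [0, 0, 0, 0],
    [0, 0, 9, 0],
    [0, 0, 0, 0],
]

changes = encode_p_frame(i_frame, next_frame)
print(len(changes), "of 12 pixels stored")               # 2 of 12 pixels stored
print(decode_p_frame(i_frame, changes) == next_frame)    # True
```

When most of the picture stays the same between frames, as in a static shot, only a small fraction of each frame needs to be stored.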

Chroma subsampling reduces the amount of colour information by storing video in a different colour space from RGB and keeping the colour channels at a lower resolution than the brightness channel. The YCbCr colour space works for video because the human eye is more sensitive to changes in brightness than changes in colour. YCbCr represents the following colour information in video:

- Y (luminance or luma)

- Cb (blue-difference chroma)

- Cr (red-difference chroma)

luma = brightness, chroma = colour
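The conversion from RGB to YCbCr, and the saving from storing less chroma, can be sketched as follows. The coefficients are the widely used full-range BT.601 values (the ones JPEG uses), and the default of halving chroma in both directions corresponds to the common 4:2:0 scheme. Both choices are assumptions made for illustration, as video standards differ in the details.

```python
def clamp_to_byte(value):
    """Round to the nearest integer and keep the result within 0-255."""
    return max(0, min(255, round(value)))


def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to luma (Y) and two chroma values (Cb, Cr)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return clamp_to_byte(y), clamp_to_byte(cb), clamp_to_byte(cr)


def samples_per_frame(width, height, chroma_h=2, chroma_v=2):
    """Count stored values: full-resolution Y plus reduced-resolution Cb and Cr."""
    luma = width * height
    chroma = 2 * (width // chroma_h) * (height // chroma_v)
    return luma + chroma


print(rgb_to_ycbcr(255, 255, 255))  # (255, 128, 128): full brightness, neutral colour
print(rgb_to_ycbcr(255, 0, 0))      # (76, 85, 255): red has low Cb and high Cr

full = samples_per_frame(1920, 1080, chroma_h=1, chroma_v=1)
subsampled = samples_per_frame(1920, 1080)
print(subsampled / full)            # 0.5
```

Every pixel keeps its own brightness value, but each 2 x 2 group of pixels shares one Cb and one Cr value, which halves the data before any other compression is applied.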

Some common video formats and their codecs:

MP4 (MPEG-4) – the MP4 container is the current industry standard for HD video. It most commonly uses the H.264 codec (also known as Advanced Video Coding, AVC, or MPEG-4 Part 10), which supports up to 8K video. It produces high-quality video at a low bitrate which means smaller file sizes – useful for streaming video content online.

Wikipedia: Advanced Video Coding.

MOV (QuickTime video format) is an Apple standard video format that usually uses MPEG-4 compression. In fact, the MPEG-4 format evolved from the QuickTime file format (QTFF) around 2001. MP4 is a revised version of this.

AVI (Audio Video Interleave) is a Windows standard video format created by Microsoft in 1992, which commonly uses the DivX codec.

Learn more about video here:

- Basics of video encoding

- Wikipedia: Comparison of video container formats

- Wikipedia: Comparison of video codecs

Lagging in digital video and computer games means the video or game does not play smoothly at the right frame rate and might stop playing while the software catches up with downloading the necessary files.

Buffering in digital video means preloading some of the video data before playing it – common in streaming video from the Internet.